12cR2 ASM fails to start when using multiple private interconnects (HAIP issue)

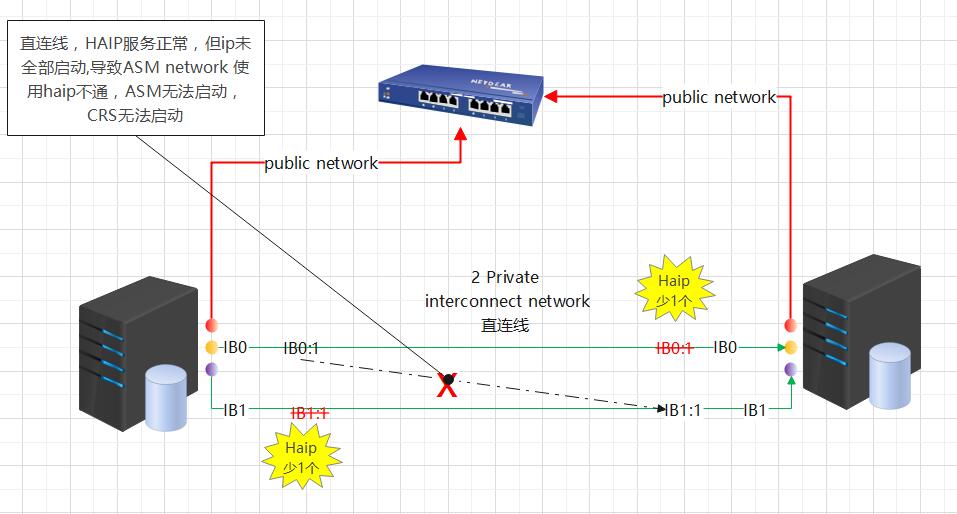

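Starting with 11.2.0.2, Oracle introduced a network redundancy feature called HAIP. HAIP is meant to replace OS-level NIC bonding and to transfer data in Active-Active mode. It supports multiple private networks, where OS-level bonding used to be the usual choice. Oracle has long wanted to rely on its own technology rather than on other layers, but HAIP has had quite a few bugs, and I personally still recommend OS-level bonding. This post briefly records one case: with two private interfaces under HAIP, each node was missing one HAIP, and the remaining HAIPs sat on different interfaces on the two nodes (crossed), so ASM could not start.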

ASM ALERT LOG

NOTE: remote asm mode is remote (mode 0x202; from cluster type)

2023-01-11T15:31:14.287419+08:00

Cluster Communication is configured to use IPs from: GPnP

IP: 169.254.29.170 Subnet: 169.254.0.0

IP: 169.254.191.75 Subnet: 169.254.128.0

KSIPC Loopback IP addresses(DEF):

127.0.0.1

KSIPC Available Transports: UDP:TCP

....

Warning: Oraping detected connectivity issues. An eviction is expected due environment issues

OS ping to instance: 2 has failed.

Please see LMON and oraping trace files for details.

2023-01-11T15:46:15.961104+08:00

LMON (ospid: 36421) detects hung instances during IMR reconfiguration

LMON (ospid: 36421) tries to kill the instance 2 in 37 seconds.

Please check instance 2's alert log and LMON trace file for more details.

2023-01-11T15:46:53.020074+08:00

Remote instance kill is issued with system inc 10

Remote instance kill map (size 1) : 2

LMON received an instance eviction notification from instance 1

The instance eviction reason is 0x20000000

The instance eviction map is 2

2023-01-11T15:46:53.587757+08:00

Reconfiguration started (old inc 10, new inc 12)

List of instances (total 1) :

ohasd_orarootagent_root.trc

2023-01-11 15:52:47.980 : USRTHRD:1197451008: {0:5:3} HAIP: assigned ip '169.254.116.29'

2023-01-11 15:52:47.980 : USRTHRD:1197451008: {0:5:3} HAIP: check ip '169.254.116.29'

2023-01-11 15:52:47.980 : USRTHRD:1197451008: {0:5:3} HAIP: CleanDeadThreads entry

2023-01-11 15:52:47.980 : USRTHRD:1197451008: {0:5:3} HAIP: assigned ip '169.254.232.245'

2023-01-11 15:52:47.980 : USRTHRD:1197451008: {0:5:3} HAIP: check ip '169.254.232.245'

2023-01-11 15:52:47.980 : USRTHRD:1197451008: {0:5:3} Start: 2 HAIP assignment, 2, 1, 1, 1

2023-01-11 15:52:47.980 : USRTHRD:1197451008: {0:5:3} to verify wt, 1-2-2

2023-01-11 15:52:47.980 : USRTHRD:1197451008: {0:5:3} to verify inf event

2023-01-11 15:52:49.036 : USRTHRD:1194911488: HAIP: event GIPCD_METRIC_UPDATE

2023-01-11 15:52:49.036 : USRTHRD:1197451008: {0:5:3} dequeue change event 0x7f2728018290, GIPCD_METRIC_UPDATE

2023-01-11 15:52:49.036 : USRTHRD:1197451008: {0:5:3} InitializeHaIps[ 1] infList 'inf ib1, ip 11.11.11.3, sub 11.11.11.0'

2023-01-11 15:52:49.036 : USRTHRD:1197451008: {0:5:3} Network interface ib1 NOT functioning (bad rank -1, 20) from anbob1, HAIP to failover now

2023-01-11 15:52:49.036 : USRTHRD:1197451008: {0:5:3} InitializeHaIps[ 0] infList 'inf ib0, ip 10.10.10.3, sub 10.10.10.0'

2023-01-11 15:52:49.036 : USRTHRD:1197451008: {0:5:3} HAIP: updateCssMbrData for ib1:jzsjk1:0;ib0:jzsjk1:99;ib0:jzsjk2:99;ib1:jzsjk2:99, Threshold 20

2023-01-11 15:52:49.036 : USRTHRD:1197451008: {0:5:3} HAIP: enter UpdatecssMbrdata

2023-01-11 15:52:49.036 : USRTHRD:1197451008: {0:5:3} HAIP: BC to Update member info HAIP-RM1;10.10.10.0#0

2023-01-11 15:52:49.036 : USRTHRD:1197451008: {0:5:3} 1 - HAIP enable is 2.

2023-01-11 15:52:49.036 : USRTHRD:1197451008: {0:5:3} to verify routes

2023-01-11 15:52:49.036 : USRTHRD:1197451008: {0:5:3} to verify start completion 2

2023-01-11 15:52:49.036 : USRTHRD:1197451008: {0:5:3} HAIP: CleanDeadThreads entry

..

2023-01-11 16:00:07.005 : USRTHRD:171906816: {0:5:3} HAIP: to set HAIP

2023-01-11 16:00:07.005 : CLSINET:171906816: (:CLSINE0018:)WARNING: failed to find interface available for interface definition ib1(:.*)?:11.11.11.0

2023-01-11 16:00:07.006 : USRTHRD:171906816: {0:5:3} HAIP: number of inf from clsinet -- 1

2023-01-11 16:00:07.006 : USRTHRD:171906816: {0:5:3} HAIP: read grpdata, len 8
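When a trace like the one above runs to hundreds of thousands of lines, it can help to pull out only the lines where HAIP reports an interface as not functioning, plus the CLSINE0018 warnings. The following is a minimal Python sketch. It assumes the line formats shown in this excerpt (other versions may log differently). The function name `scan_haip_trace` is hypothetical, and reading the second number in `bad rank -1, 20` as the threshold is an assumption based on the `Threshold 20` text in the same trace.

```python
import re

# Patterns are derived only from the trace excerpt in this post.
NOT_FUNCTIONING = re.compile(
    r"Network interface (\S+) NOT functioning "
    r"\(bad rank (-?\d+), (\d+)\) from (\S+),"
)
NO_INTERFACE = re.compile(
    r"\(:CLSINE0018:\)WARNING: failed to find interface available "
    r"for interface definition (\S+)"
)


def scan_haip_trace(lines):
    """Return (failed, missing) findings from orarootagent trace lines.

    failed:  list of (interface, rank, assumed threshold, reporting node)
    missing: list of interface definitions that CLSINET could not match
    """
    failed, missing = [], []
    for line in lines:
        m = NOT_FUNCTIONING.search(line)
        if m:
            iface, rank, threshold, node = m.groups()
            failed.append((iface, int(rank), int(threshold), node))
        m = NO_INTERFACE.search(line)
        if m:
            missing.append(m.group(1))
    return failed, missing


# Two lines copied from the trace above.
sample = [
    "2023-01-11 15:52:49.036 : USRTHRD:1197451008: {0:5:3} Network interface ib1 "
    "NOT functioning (bad rank -1, 20) from anbob1, HAIP to failover now",
    "2023-01-11 16:00:07.005 : CLSINET:171906816: (:CLSINE0018:)WARNING: failed to "
    "find interface available for interface definition ib1(:.*)?:11.11.11.0",
]
failed, missing = scan_haip_trace(sample)
print(failed)
print(missing)
```

In practice the `lines` argument would be an open file handle on ohasd_orarootagent_root.trc rather than an inline list.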

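Troubleshooting steps:

1. Ping between the nodes using the private IPs.

2. Check whether a HAIP exists on every private interface.

3. Ping between the nodes using the HAIP addresses.

4. Use ifconfig to check that the subnets match the output of oifcfg iflist.

5. Check the routing table.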
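The HAIP placement check can be sketched in code. Given the interfaces on which each node currently hosts its HAIPs (as read from ifconfig), the sketch below flags private interfaces that carry no HAIP and node pairs whose HAIPs share no interface. The function name and the input structure are hypothetical, and treating "no shared interface" as the failure condition is an assumption drawn from the cases in this post, not an Oracle rule. The sample addresses are the ones that appear in the LMON trace of the second case.

```python
def check_haip_placement(private_ifaces, haip_by_node):
    """Check HAIP placement across nodes.

    private_ifaces: private interface names configured for the cluster.
    haip_by_node:   {node: {interface: [haip, ...]}} as observed on each node.
    Returns (missing, crossed):
      missing: {node: [private interfaces without any HAIP]}
      crossed: [(node_a, node_b)] pairs whose HAIPs share no interface
    """
    missing = {}
    for node, ifaces in haip_by_node.items():
        without = [i for i in private_ifaces if not ifaces.get(i)]
        if without:
            missing[node] = without

    nodes = sorted(haip_by_node)
    crossed = []
    for idx, a in enumerate(nodes):
        used_a = {i for i, ips in haip_by_node[a].items() if ips}
        for b in nodes[idx + 1:]:
            used_b = {i for i, ips in haip_by_node[b].items() if ips}
            if not (used_a & used_b):
                crossed.append((a, b))
    return missing, crossed


# One HAIP per node, each on a different interface.
observed = {
    "node1": {"ib1": ["169.254.172.104"]},
    "node2": {"ib0": ["169.254.42.176"]},
}
missing, crossed = check_haip_placement(["ib0", "ib1"], observed)
print(missing)
print(crossed)
```

A healthy two-interface cluster would return an empty `missing` dictionary and an empty `crossed` list.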

Known Issues: Grid Infrastructure Redundant Interconnect and ora.cluster_interconnect.haip (Doc ID 1640865.1)

HAIP BUG

Bug 10332426 – HAIP fails to start due to network mismatch

Bug 19270660 – AIX: category: -2, operation: open, loc: bpfopen:1,os, OS error: 2, other: ARP device /dev/bpf4, interface en8

Bug 16445624 – AIX: HAIP fails to start

Bug 13989181 – AIX: HAIP fails to start with: category: -2, operation: SETIF, loc: bpfopen:21,o, OS error: 6, other: dev /dev/bpf0

Note 1447517.1 – AIX: HAIP fails to start if bpf and other devices using same major/minor number

Bug 10253028 – “oifcfg iflist -p -n” not showing HAIP on AIX as expected

Bug 13332363 – Wrong MTU for HAIP on Solaris

Bug 10114953 – only one HAIP is create on HP-UX

Bug 10363902 – HAIP Infiniband support for Linux and Solaris

Bug 10357258 – Many HAIP started on Solaris IPMP – not affecting 11.2.0.3

Bug 10397652/ 12767231 – HAIP not failing over when private network fails – not affecting 11.2.0.3

Bug 11077756 – allow root script to continue upon HAIP failure

Bug 12546712 – not affecting 11.2.0.3

HAIP fails to start if default gateway is configured for VLAN for private network on network switch

Bug 12425730 – HAIP does not start, 11.2.0.3 not affected

ASM on Non-First Node (Second or Others) Fails to Start: PMON (ospid: nnnn): terminating the instance due to error 481

11gR2 GI HAIP Resource Not Created in Solaris 11 if IPMP is Used for Private Network

BUG 20900984 – HAIP CONFLICTION DETECTED BETWEEN TWO RAC CLUSTERS IN THE SAME VLAN

note 2059802.1 – AIX HAIP: failed to create arp – operation: open, loc: bpfopen:1,os, OS error: 2

note 2059461.1 – AIX: HAIP Fails to Start: operation: open, loc: bpfopen:1,os, OS error: 2

note 1963248.1 – AIX HAIP: operation: SETIF, loc: bpfopen:21,o, OS error: 6, other: dev /dev/bpf0

note 2066903.1 – operation: routedel, loc: system, OS error: 76, other: failed to execute route del, ret 256

Bug 29379299 – HAIP SUBNET FLIPS UPON BOTH LINK DOWN/UP EVENT

HAIP failover improvement

BUG 23472436 – HAIP USING ONLY 2 OF 4 NETWORK INTERFACES ASSIGNED TO THE INTERCONNECT

BUG 25073182 – ONE GIPC RANK FOR EACH PRIVATE NETWORK INTERFACE

Bug 24509481 – HAIP TO HAVE SMALLER SUBNET INSTEAD OF WHOLE LINK LOCAL 169.254.*.*

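In this case the HAIP resource itself started normally, but ASM could not start because oraping between the nodes' HAIPs failed. The hardware is InfiniBand, with two interconnect networks used as private networks and cabled as direct connections, which is not a recommended configuration. Oddly, each node started only one HAIP: on ib1 on node1 and on ib0 on node2. Because both links are direct connections, pinging the private IP on each ib interface works, but the two HAIPs sit on different physical links and cannot ping each other. As a result ASM fails to start, and CRS on whichever node starts second cannot come up.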

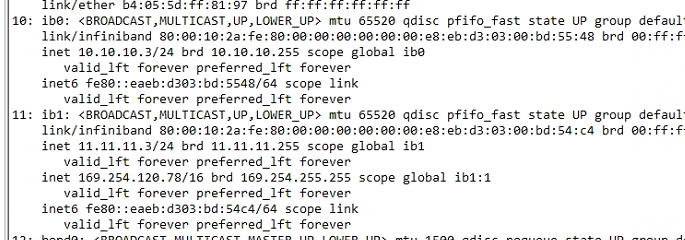

NODE1

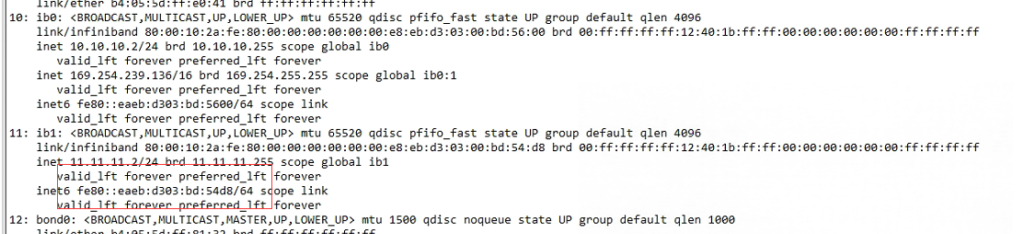

NODE2

Solution:

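1. Because the links are direct connections, bonding does not help either (with active-backup bonding the physical path is broken in the same way when ib0, the active link on node1, fails while the active link on node2 is ib1). The following restart order brings everything up normally: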

# node1

crsctl stop crs

# node2

crsctl stop crs

– 2 HAIPs started

# node1

crsctl start crs

– started normally

2. Disable HAIP

Risk: this may affect future upgrades or the addition of new nodes.

1. Run "crsctl stop crs" on all nodes to stop the CRS stack.

2. On one node, run the following commands:

$CRS_HOME/bin/crsctl start crs -excl -nocrs

$CRS_HOME/bin/crsctl stop res ora.asm -init

$CRS_HOME/bin/crsctl modify res ora.cluster_interconnect.haip -attr "ENABLED=0" -init

$CRS_HOME/bin/crsctl modify res ora.asm -attr "START_DEPENDENCIES='hard(ora.cssd,ora.ctssd)pullup(ora.cssd,ora.ctssd)weak(ora.drivers.acfs)',STOP_DEPENDENCIES='hard(intermediate:ora.cssd)'" -init

$CRS_HOME/bin/crsctl stop crs

3. Repeat Step(2) on other nodes.

4. Run "crsctl start crs" on all nodes to restart CRS stack.

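3. To avoid having to stop all nodes every time, as a temporary workaround we disabled ib1 and used only ib0. After that, single-node restarts work normally (but the interconnect network has no redundancy). For example:

# as grid user

$ oifcfg getif

$ oifcfg delif -global eth3/xxx.xxx.xx.0

– CRS must be restarted on all nodes; a rolling restart of CRS is not possible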

— 2023-7-30 update —

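Recently I hit the same problem again on a 12.2 RAC at a bank, again with two IB interfaces used as the private network. The surviving node2 had only one HAIP, on ib0, and after node1 was rebooted it also had only one HAIP, on ib1. This time the links are not direct connections, and the two private IPs can ping each other normally. The only symptom was that CRS could not start on node1 after its reboot.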

Starting the ASM instance manually:

SQL> startup

ORA-39510: CRS error performing start on instance '+ASM1' on '+ASM'

CRS-2800: Cannot start resource 'ora.ASMNET1LSNR_ASM.lsnr' as it is already in the INTERMEDIATE state on server 'anbob1'

CRS-0223: Resource 'ora.asm 1 1' has placement error

clsr_start_resource:260 status:223

clsrapi_start_asm:start_asmdbs status:223

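crsctl stat res -t shows ASMNET1LSNR_ASM in the INTERMEDIATE state, and restarting ASMNET1LSNR manually fails. We ran ifdown on ib1 of node1, and the HAIP moved to ib0. At that point the HAIPs of both nodes were on ib0, yet ASM still would not start. The db instance could start, however, because with 12c Flex ASM a local db instance can use a remote ASM instance. We then ran ifup on ib1 of node1, which triggered ASMNET1LSNR on node1 to start, and the ASM instance started successfully. Each instance still has only one HAIP, though, and both are on ib0.

The db alert log shows that only one HAIP was left when the db instance started two years ago, so the problem had existed for a long time. Grepping ohasd_orarootagent_root.trc on node1 for the keyword "suggestedHAIP" gives: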

Line 198516: 2023-07-24 11:00:41.757 : USRTHRD:671086336: {0:5:3} HAIP: i 0, haip 0, hag size 1, ip 192.168.2.x1, suggestedHAIP 169.254.172.104, assignedHAIP 169.254.172.104

Line 198623: 2023-07-24 11:00:45.764 : USRTHRD:671086336: {0:5:3} HAIP: i 0, haip 0, hag size 1, ip 192.168.2.x1, suggestedHAIP 169.254.172.104, assignedHAIP 169.254.172.104

Line 198907: 2023-07-24 11:00:49.446 : USRTHRD:671086336: {0:5:3} HAIP: i 0, haip 0, hag size 1, ip 192.168.2.x1, suggestedHAIP 169.254.172.104, assignedHAIP 169.254.172.104

Line 198946: 2023-07-24 11:00:49.770 : USRTHRD:671086336: {0:5:3} HAIP: i 0, haip 0, hag size 1, ip 192.168.2.x1, suggestedHAIP 169.254.172.104, assignedHAIP 169.254.172.104

Line 199025: 2023-07-24 11:00:53.460 : USRTHRD:671086336: {0:5:3} HAIP: i 0, haip 0, hag size 1, ip 192.168.2.x1, suggestedHAIP 169.254.172.104, assignedHAIP 169.254.172.104

Line 199064: 2023-07-24 11:00:53.777 : USRTHRD:671086336: {0:5:3} HAIP: i 0, haip 0, hag size 1, ip 192.168.2.x1, suggestedHAIP 169.254.172.104, assignedHAIP 169.254.172.104

--- OS REBOOT

Line 201422: 2023-07-24 12:59:19.518 : USRTHRD:4172297984: {0:5:3} HAIP: i 0, haip 0, hag size 2, ip 192.168.1.x1, suggestedHAIP 169.254.172.104, assignedHAIP

Line 201496: 2023-07-24 12:59:19.622 : USRTHRD:4172297984: {0:5:3} HAIP: i 0, haip 0, hag size 2, ip 192.168.1.x1, suggestedHAIP 169.254.172.104, assignedHAIP

Line 201515: 2023-07-24 12:59:19.623 : USRTHRD:4172297984: {0:5:3} HAIP: i 0, haip 0, hag size 2, ip 192.168.1.x1, suggestedHAIP 169.254.172.104, assignedHAIP

Line 201567: 2023-07-24 12:59:21.215 : USRTHRD:4172297984: {0:5:3} HAIP: i 0, haip 0, hag size 2, ip 192.168.1.x1, suggestedHAIP 169.254.172.104, assignedHAIP

Line 201662: 2023-07-24 12:59:23.757 : USRTHRD:4172297984: {0:5:3} HAIP: i 0, haip 0, hag size 2, ip 192.168.1.x1, suggestedHAIP 169.254.172.104, assignedHAIP

Line 201701: 2023-07-24 12:59:25.214 : USRTHRD:4172297984: {0:5:3} HAIP: i 0, haip 0, hag size 2, ip 192.168.1.x1, suggestedHAIP 169.254.172.104, assignedHAIP

Line 201778: 2023-07-24 12:59:31.769 : USRTHRD:4172297984: {0:5:3} HAIP: i 0, haip 0, hag size 2, ip 192.168.1.x1, suggestedHAIP 169.254.172.104, assignedHAIP

Line 202031: 2023-07-24 12:59:35.773 : USRTHRD:4172297984: {0:5:3} HAIP: i 0, haip 0, hag size 2, ip 192.168.1.x1, suggestedHAIP 169.254.172.104, assignedHAIP 169.254.172.104

Line 202097: 2023-07-24 12:59:37.230 : USRTHRD:4172297984: {0:5:3} HAIP: i 0, haip 0, hag size 2, ip 192.168.1.x1, suggestedHAIP 169.254.172.104, assignedHAIP 169.254.172.104

Line 202179: 2023-07-24 12:59:39.779 : USRTHRD:4172297984: {0:5:3} HAIP: i 0, haip 0, hag size 2, ip 192.168.1.x1, suggestedHAIP 169.254.172.104, assignedHAIP 169.254.172.104

Line 202220: 2023-07-24 12:59:41.235 : USRTHRD:4172297984: {0:5:3} HAIP: i 0, haip 0, hag size 2, ip 192.168.1.x1, suggestedHAIP 169.254.172.104, assignedHAIP 169.254.172.104

Note:

Originally the single HAIP on node1 was also on ib0 (same as node2). Only after the OS reboot did CRS bring the HAIP up on ib1.
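The suggestedHAIP lines are highly repetitive, so the moment the HAIP moved is easier to see once consecutive identical states are collapsed into runs. The following is a rough Python sketch, assuming the line format shown in the grep output above. The name `summarize_haip_runs` is hypothetical, and the sample lines are taken from that output with the editor's line-number prefix removed.

```python
import re

# Format assumed from the grep output in this post.
HAIP_LINE = re.compile(
    r"(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3}) .*"
    r"hag size (\d+), ip (\S+), suggestedHAIP (\S+), assignedHAIP ?(\S*)"
)


def summarize_haip_runs(lines):
    """Collapse consecutive lines with the same (hag size, ip, assignedHAIP)."""
    runs = []
    for line in lines:
        m = HAIP_LINE.search(line)
        if not m:
            continue  # e.g. the "--- OS REBOOT" marker
        ts, hag, ip, _suggested, assigned = m.groups()
        key = (int(hag), ip, assigned or None)  # None: no HAIP assigned yet
        if runs and runs[-1]["key"] == key:
            runs[-1]["last"] = ts
            runs[-1]["count"] += 1
        else:
            runs.append({"key": key, "first": ts, "last": ts, "count": 1})
    return runs


prefix = "{0:5:3} HAIP: i 0, haip 0, "
sample = [
    "2023-07-24 11:00:53.777 : USRTHRD:671086336: " + prefix
    + "hag size 1, ip 192.168.2.x1, suggestedHAIP 169.254.172.104, "
    + "assignedHAIP 169.254.172.104",
    "--- OS REBOOT",
    "2023-07-24 12:59:31.769 : USRTHRD:4172297984: " + prefix
    + "hag size 2, ip 192.168.1.x1, suggestedHAIP 169.254.172.104, assignedHAIP",
    "2023-07-24 12:59:35.773 : USRTHRD:4172297984: " + prefix
    + "hag size 2, ip 192.168.1.x1, suggestedHAIP 169.254.172.104, "
    + "assignedHAIP 169.254.172.104",
]
runs = summarize_haip_runs(sample)
for run in runs:
    print(run["first"], run["key"], run["count"])
```

On the sample, the run keys show the private IP changing from the 192.168.2.x subnet (ib0) to the 192.168.1.x subnet (ib1) across the reboot, with a short window in which no HAIP is assigned.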

ASM lmon trace

Instance Information from Unique Table

inst 1 inc 0 uniq 0x9ece ver 12020001 state 0x10000000 (hub) [..:0.0]

inst 2 inc 0 uniq 0x7e7b56 ver 12020001 state 0x10000000 (hub) [..:0.0]

kjxgmpngchk: ping to inst 2 (169.254.42.176) failed with failure rate 1% (total 1 pings)

==============================

Instance Interface Information

==============================

num of pending ping requests = 0

inst 1 nifs 1 (our node)

real IP 169.254.172.104

inst 2 nifs 1 overall state (0x40:fail)

real IP 169.254.42.176 status (0x41:fail) elptm 0x0

Member Kill Requests

current time 0x64be4374 next killtm 0x0

nkills issued 0 queue 0x7f6ceaea91c0

Member information:

Inst 1, incarn 0, version 0x9ece, thrd -1, cap 0x3

prev thrd -1, status 0x0101 (J...), err 0x0000

valct 2

Inst 2, incarn 0, version 0x7e7b56, thrd -1, cap 0x0

prev thrd -1, status 0x0001 (J...), err 0x0000

valct 0

*** 2023-07-24T17:25:58.263647+08:00

================================

== System Network Information ==

================================

==[ Network Interfaces : 7 (7 max) ]============

lo | 127.0.0.1 | 255.0.0.0 | UP|RUNNING

ens7f0 | 10.83.4.81 | 255.255.255.0 | UP|RUNNING

ib0 | 192.168.2.x1 | 255.255.255.0 | UP|RUNNING

ib1 | 192.168.1.x1 | 255.255.255.0 | UP|RUNNING

ib1:1 | 169.254.172.104 | 255.255.0.0 | UP|RUNNING

team0 | 10.83.1.81 | 255.255.255.0 | UP|RUNNING

team0:1 | 10.83.1.83 | 255.255.255.0 | UP|RUNNING

===[ IPv4 Route Table : 6 entries ]============

Destination Gateway Iface

0.0.0.0/0 10.83.1.254 team0

10.83.1.0/24 0.0.0.0 team0

10.83.4.0/24 0.0.0.0 ens7f0

169.254.0.0/16 0.0.0.0 ib1

192.168.1.0/24 0.0.0.0 ib1

192.168.2.0/24 0.0.0.0 ib0

===[ ARP Table ]============

IP address HW type Flags HW address Mask Device

169.254.42.176 0x20 0x0 00:00:00:00:00:00:00:00:00 * ib1

192.168.1.86 0x20 0x2 20:00:03:07:fe:80:00:00:00 * ib1

192.168.1.85 0x20 0x2 20:00:05:07:fe:80:00:00:00 * ib1

169.254.169.254 0x20 0x0 00:00:00:00:00:00:00:00:00 * ib1

10.83.4.100 0x1 0x0 00:00:00:00:00:00 * ens7f0

10.83.1.82 0x1 0x2 d4:f5:ef:19:ec:48 * team0

10.83.4.145 0x1 0x0 00:00:00:00:00:00 * ens7f0

192.168.2.82 0x20 0x2 80:00:01:06:fe:80:00:00:00 * ib0

10.83.1.83 0x1 0x0 00:00:00:00:00:00 * team0

192.168.1.82 0x20 0x2 80:00:01:06:fe:80:00:00:00 * ib1

192.168.1.87 0x20 0x2 20:00:05:07:fe:80:00:00:00 * ib1

10.83.1.254 0x1 0x2 6c:e5:f7:da:f0:01 * team0

===[ Network Config : 10 devices ]============

eno1 .rp_filter = 1

eno2 .rp_filter = 1

ens5f0 .rp_filter = 1

ens5f1 .rp_filter = 1

ens7f0 .rp_filter = 1

ens7f1 .rp_filter = 1

ib0 .rp_filter = 2

ib1 .rp_filter = 2

lo .rp_filter = 0

team0 .rp_filter = 1

kjxgmpoll: verify the CGS reconfig state.

kjxgmpoll: dump the CGS communication info.

my inst 1 (node 0) inc (0 1) send-window 1000

flags 0xc100002 (..) ats-threshold 0

reg msgs: sz 504 blks 1 alloc 100 free 99

big msgs: sz 4112 blks 0 alloc 0 free 0

rcv msgs: sz 4112 blks 8 alloc 2000 free 0

total rcv msgs 0 size 0 avgsz 0 (in bytes)

total rcv bmsgs 0 embedded msgs 0 avg 0

total prc msgs 2 pending sends 1

conn[inst 1] fg 0x0 snds (2:0) qmsgs (0:0) bths (0:0) unas 0 unar 0:0 nreq 0 nack (0:0)

conn[inst 2] fg 0x0 snds (1:1) qmsgs (0:0) bths (0:0) unas 0 unar 0:0 nreq 0 nack (0:0)

kjxgfdumpco - Dumping completion objects stats:

kgxgt_dti - dumping stats for table: Completion objects table

size: 31 - health: UNDERUSED

kjxgfdumpco - Dumping completion objects:

kjxgmpoll: invoke the CGS substate check callbacks (tmout enabled).

kjxgmpngchk: 0 pending request

invoke callback kjxgrssvote_check (addr 0x9514380)

kjxgrssvote_check: IMR prop state 0x3 (verify)

kjxgrssvote_check_memverify: some instances have not sent MEMI yet

kjxgrssvote_check: wait-for inst map: 2

No reconfig messages from other instances in the cluster during startup. Hence, LMON is terminating the instance. Please check the network logs of this instance as well as the network health of the cluster for problems

2023-07-24 17:25:58.282 :kjzduptcctx(): Notifying DIAG for crash event

----- Abridged Call Stack Trace -----

ksedsts()+408<-kjzduptcctx()+868<-kjzdicrshnfy()+1113<-ksuitm_opt()+1678<-kjxgrssvote_check_memverify()+1844<-kjxgrssvote_check()+1187<-kjxgmccs()+2219<-kjxgmpoll()+3092<-kjxggpoll()+485<-kjfmact()+365<-kjfcln()+6477<-ksbrdp()+1079<-opirip()+609<-opidrv()+602

<-sou2o()+145<-opimai_real()+202<-ssthrdmain()+417<-main()+262<-__libc_start_main()+245

----- End of Abridged Call Stack Trace -----
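The route table in the dump explains the failed ping. The whole 169.254.0.0/16 range is routed through ib1 on node1, so packets for node2's HAIP 169.254.42.176 leave through ib1, while that HAIP is hosted on ib0 on node2. The ARP entry for 169.254.42.176 on ib1 has an all-zero hardware address, which fits this picture. The following is a small Python sketch of the lookup, using only the route entries printed in the trace. The function name is hypothetical, and plain longest-prefix matching is a simplification of what the kernel does (metrics and policy routing are ignored).

```python
import ipaddress

# (destination, interface) pairs copied from the IPv4 route table in the trace.
ROUTES = [
    ("0.0.0.0/0", "team0"),
    ("10.83.1.0/24", "team0"),
    ("10.83.4.0/24", "ens7f0"),
    ("169.254.0.0/16", "ib1"),
    ("192.168.1.0/24", "ib1"),
    ("192.168.2.0/24", "ib0"),
]


def egress_interface(dest, routes=ROUTES):
    """Return the outgoing interface for dest by longest-prefix match."""
    addr = ipaddress.ip_address(dest)
    matches = [
        (ipaddress.ip_network(net), iface)
        for net, iface in routes
        if addr in ipaddress.ip_network(net)
    ]
    _net, iface = max(matches, key=lambda m: m[0].prefixlen)
    return iface


# HAIP of instance 2, as reported by kjxgmpngchk in the trace.
print(egress_interface("169.254.42.176"))
```

With this table the lookup returns ib1 for 169.254.42.176, while a private address such as 192.168.2.82 resolves to ib0.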

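We got CRS started by temporarily bringing one of the IB interfaces down and up, and have since used a downtime window to restart CRS on all nodes, which restored the normal two HAIPs. Based on these two cases, for multiple private networks I still recommend OS-level bonding.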

— over —
