最近某客户一套Oracle19c RAC 环境,在负载相对空闲时也面临一个常见的问题”log file sync”, 数据库存储已经是较快的SSD设备, 下面记录一下容易忽略的RAID配置,居然对数据库的影响如此之大的案例。

AWR 现象

Top 10 Foreground Events by Total Wait Time

| Event | Waits | Total Wait Time (sec) | Avg Wait | % DB time | Wait Class |

|---|---|---|---|---|---|

| log file sync | 1,730 | 929.9 | 537.53ms | 38.0 | Commit |

| DB CPU | 836.5 | 34.2 | |||

| db file sequential read | 74,417 | 255.5 | 3.43ms | 10.5 | User I/O |

| control file sequential read | 10,547 | 190.9 | 18.10ms | 7.8 | System I/O |

| Disk file operations I/O | 6,744 | 61.4 | 9.11ms | 2.5 | User I/O |

| gc cr block busy | 150 | 58.7 | 391.65ms | 2.4 | Cluster |

| Disk file Mirror Read | 2,536 | 52.1 | 20.53ms | 2.1 | User I/O |

| gc buffer busy acquire | 7,917 | 37.4 | 4.72ms | 1.5 | Cluster |

| read by other session | 3,342 | 29.4 | 8.79ms | 1.2 | User I/O |

| Sync ASM rebalance | 90 | 6.1 | 68.18ms | .3 | Other |

Wait Classes by Total Wait Time

| Wait Class | Waits | Total Wait Time (sec) | Avg Wait Time | % DB time | Avg Active Sessions |

|---|---|---|---|---|---|

| System I/O | 71,772 | 3,568 | 49.71ms | 145.9 | 0.3 |

| User I/O | 112,767 | 1,982 | 17.58ms | 81.1 | 0.2 |

| Other | 2,133,760 | 1,706 | 799.54us | 69.8 | 0.2 |

| Commit | 1,741 | 936 | 537.63ms | 38.3 | 0.1 |

| DB CPU | 837 | 34.2 | 0.1 | ||

| Cluster | 59,415 | 112 | 1.89ms | 4.6 | 0.0 |

| Application | 863 | 7 | 7.93ms | .3 | 0.0 |

| Concurrency | 37,936 | 4 | 92.57us | .1 | 0.0 |

| Administrative | 7 | 1 | 99.59ms | .0 | 0.0 |

| Network | 48,125 | 0 | 7.07us | .0 | 0.0 |

| Configuration | 65 | 0 | 1.79ms | .0 | 0.0 |

Host CPU

| CPUs | Cores | Sockets | Load Average Begin | Load Average End | %User | %System | %WIO | %Idle |

|---|---|---|---|---|---|---|---|---|

| 72 | 36 | 2 | 11.99 | 5.17 | 2.0 | 0.9 | 4.3 | 97.1

|

IO Profile

| Read+Write Per Second | Read per Second | Write Per Second | |

|---|---|---|---|

| Total Requests: | 12.9 | 11.5 | 1.3 |

| Database Requests: | 7.4 | 6.9 | 0.4 |

| Optimized Requests: | 0.0 | 0.0 | 0.0 |

| Redo Requests: | 0.6 | 0.0 | 0.6 |

| Total (MB): | 0.1 | 0.1 | 0.0 |

| Database (MB): | 0.1 | 0.1 | 0.0 |

| Optimized Total (MB): | 0.0 | 0.0 | 0.0 |

| Redo (MB): | 0.0 | 0.0 | 0.0 |

| Database (blocks): | 7.7 | 6.9 | 0.7 |

| Via Buffer Cache (blocks): | 7.7 | 6.9 | 0.7 |

| Direct (blocks): | 0.0 | 0.0 | 0.0 |

Note:

可见数据库的LOG FILE SYNC达500ms以上,并且CPU 负载相对空闲,IO吞吐量并不大,top event还有其它数据文件相关的I/O class wait event, 并且都是ms级别,这样的负载对于SSD存储通常应该就是在us级。

查看LOG FILE PARALLEL WRITE

Wait Event Histogram (up to 32 ms)

- Units for Total Waits column: K is 1000, M is 1000000, G is 1000000000

- Units for % of Total Waits: us is microseconds, ms is 1024 microseconds (approximately 1 millisecond)

- % of Total Waits: total waits for all wait classes, including Idle

- % of Total Waits: value of .0 indicates value was <.05%; value of null is truly 0

- Ordered by Event (only non-idle events are displayed)

| % of Total Waits | |||||||||

| Event | Waits 1ms to 32ms | <512us | <1ms | <2ms | <4ms | <8ms | <16ms | <32ms | >=32ms |

|---|---|---|---|---|---|---|---|---|---|

| control file parallel write | 213 | 67.1 | .9 | 3.9 | 1.1 | .2 | .2 | .3 | 26.4 |

| db file parallel write | 135 | 81.6 | .6 | 1.6 | .4 | .1 | .2 | .2 | 15.2 |

| log file parallel write | 272 | 69.0 | .9 | 1.7 | 1.0 | .2 | .2 | .3 | 26.7

|

查看当时的OSW IOSTAT信息

zzz ***Mon Jul 25 05:00:01 CST 2022

avg-cpu: %user %nice %system %iowait %steal %idle

2.55 0.00 2.64 25.02 0.00 69.79

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

sda 0.00 0.00 0.00 64.00 0.00 544.00 17.00 0.00 0.00 0.00 0.00 0.00 0.00

dm-0 0.00 0.00 0.00 13.00 0.00 64.00 9.85 0.00 0.00 0.00 0.00 0.00 0.00

dm-1 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

dm-2 0.00 0.00 0.00 51.00 0.00 480.00 18.82 0.00 0.00 0.00 0.00 0.00 0.00

sdb 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

sdc 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

dm-3 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

sdj 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

sdk 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

sdl 0.00 0.00 0.00 1.00 0.00 16.00 32.00 0.00 1.00 0.00 1.00 1.00 0.10

dm-7 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

sdm 0.00 0.00 0.00 2.00 0.00 4.50 4.50 0.00 0.00 0.00 0.00 0.00 0.00

dm-8 0.00 0.00 0.00 3.00 0.00 20.50 13.67 0.00 0.33 0.00 0.33 0.33 0.10

sdn 0.00 0.00 0.00 0.00 0.00 0.00 0.00 1.16 0.00 0.00 0.00 0.00 68.50

sdo 0.00 0.00 1.00 1.00 0.50 4.00 4.50 0.93 288.50 338.00 239.00 346.00 69.20

dm-9 0.00 0.00 0.00 0.00 0.00 0.00 0.00 1.83 0.00 0.00 0.00 0.00 68.50

sdp 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.67 0.00 0.00 0.00 0.00 67.10

dm-10 0.00 0.00 2.00 1.00 4.50 4.00 5.67 2.17 293.00 320.00 239.00 230.67 69.20

sdr 0.00 0.00 1.00 0.00 4.00 0.00 8.00 1.24 302.00 302.00 0.00 656.00 65.60

sdw 0.00 0.00 0.00 2.00 0.00 9.00 9.00 1.39 522.00 0.00 522.00 500.00 100.00

dm-14 0.00 0.00 0.00 4.00 0.00 13.50 6.75 3.37 558.00 0.00 558.00 250.00 100.00

sdz 0.00 0.00 0.00 2.00 0.00 4.50 4.50 1.98 593.00 0.00 593.00 500.00 100.00

dm-15 0.00 0.00 1.00 1.00 8.00 4.00 12.00 1.94 523.00 787.00 259.00 500.00 100.00

sdaa 0.00 0.00 1.00 1.00 8.00 4.00 12.00 1.26 523.00 787.00 259.00 499.50 99.90

sdab 0.00 0.00 3.00 2.00 96.50 4.50 40.40 1.24 759.00 1000.00 397.50 62.00 31.00

sdac 0.00 0.00 34.00 1.00 352.00 4.00 20.34 0.94 71.83 73.94 0.00 9.00 31.50

dm-16 0.00 0.00 7.00 2.00 257.00 4.50 58.11 2.17 754.89 857.00 397.50 34.44 31.00

sdad 0.00 0.00 4.00 0.00 160.50 0.00 80.25 0.93 749.75 749.75 0.00 77.50 31.00

dm-17 0.00 0.00 69.00 1.00 768.00 4.00 22.06 1.87 78.81 79.96 0.00 4.57 32.00

sdaf 0.00 0.00 35.00 0.00 416.00 0.00 23.77 0.94 85.80 85.80 0.00 9.00 31.50

sdak 0.00 0.00 77.00 1.00 676.00 1.00 17.36 1.26 44.74 45.31 1.00 4.21 32.80

sdam 0.00 0.00 77.00 2.00 724.00 17.00 18.76 1.49 50.19 48.45 117.00 4.14 32.70

sdan 0.00 0.00 2.00 0.00 96.00 0.00 96.00 0.62 998.50 998.50 0.00 154.50 30.90

dm-21 0.00 0.00 154.00 3.00 1400.00 18.00 18.06 2.74 47.50 46.90 78.33 2.14 33.60

sdao 0.00 0.00 3.00 0.00 160.00 0.00 106.67 0.93 999.33 999.33 0.00 103.00 30.90

dm-22 0.00 0.00 5.00 0.00 256.00 0.00 102.40 1.54 999.00 999.00 0.00 61.80 30.90

Note:

可见I/O设备在R/W很小的情况下,await svctm %util相对比较高,问题应该是在OS下层存储端。

Megacli

在操作系统上可以安装使用megacli工具查看和配置RAID, 这里附几个常用命令,更多可查看wiki

Before we go through megacli commands we need to follow megacli concepts.

Adapter - The physical controller which we are going to use, represented by id (usually 0). Enclosure - The physical chassis the physical drives attached to, represented by id, such as 254,252 etc. Physical Drives - Physical Hard Disks attached to controller, represented by id, 0,1,2,3 etc. Virtual Drives - Those drives contains Physical Drives and equal to Raid Devices, represented by id, 0,1,2,3 etc.

Gather info about raid controller Make sure you have raid controller attached to your server, using lspci.

# lspci | grep -i raid Output example: 81:00.0 RAID bus controller: LSI Logic / Symbios Logic MegaRAID SAS 2208 [Thunderbolt] (rev 05)

Gather info about raid adapters:

# megacli -AdpGetPciInfo -aAll Output example: PCI information for Controller 0 -------------------------------- Bus Number : 5 Device Number : 0 Function Number : 0 Exit Code: 0x00

We can see here the adapters information, we will use the adapter id later on, adapter id is 0.

Gather info about the enclosure

# megacli -EncInfo -a0

Gather info about physical drives

Adapter id is 0.

# megacli -LdPdInfo -a0

Gather info about Virtual drives

# megacli -LDInfo -Lall -a0

Gather info about raid level

# megacli -LDInfo -L2 -a0 | grep -i raid Output example: RAID Level : Primary-1, Secondary-0, RAID Level Qualifier-0

We can see here Primary raid level 1 and secondary 0. It means actually raid 0 over raid 1 that is RAID 10.

Cache Policy

Cache Policy’s are how the raid card uses on board RAM to collect data before writing out to disk or to read data before the system asks for it.

WriteBack uses the card’s cache to collect enough data to make a series of long sequential writes out to disk. This is the fastest write method.

WriteThrough tells the card to write all data directly to disk without cache. This method is quite slow by about 1/10 the speed of WriteBack, but is safer as no data can be lost that was in cache when the machine’s power fails.

ReadAdaptive uses an algorithm to see if when the OS asks for a bunch of data blocks sequentially, if we should read a few more sequential blocks because the OS _might_ ask for those too. This method can lead to good speed increases.

ReadAheadNone tells the raid card to only read the data off the raid disk if it was actually asked for. No more, no less.

Cached allows the general use of the cards cache for any data which is read or written. Very efficient if the same data is accessed over and over again.

Direct is straight access to the disk without ever storing data in the cache. This can be slow as any I/O has to touch the disk platters.

# megacli -LDInfo -Lall -aALL Adapter 0 -- Virtual Drive Information: Virtual Drive: 0 (Target Id: 0) Name : RAID Level : Primary-1, Secondary-0, RAID Level Qualifier-0 Size : 1.090 TB Sector Size : 512 Is VD emulated : No Mirror Data : 1.090 TB State : Optimal Strip Size : 64 KB Number Of Drives : 2 Span Depth : 1 Default Cache Policy: WriteBack, ReadAhead, Direct, No Write Cache if Bad BBU Current Cache Policy: WriteBack, ReadAhead, Direct, No Write Cache if Bad BBU Default Access Policy: Read/Write Current Access Policy: Read/Write Disk Cache Policy : Disk's Default Encryption Type : None Default Power Savings Policy: Controller Defined Current Power Savings Policy: None Can spin up in 1 minute: Yes LD has drives that support T10 power conditions: Yes LD's IO profile supports MAX power savings with cached writes: No Bad Blocks Exist: No Is VD Cached: No Exit Code: 0x00

对于SSD 存储的RAID配置时建议使用Writethrough, ReadAheadNone, Direct, nocache. 因为SSD已经性能远优于HDD,默认的RAID更适用于HDD, 不用预读到RAID 控制器的CACHE, 因为SSD内部CACHE已足够强大,并且是iops也并非瓶颈,使用预读是按strip size预读,以于Oracle DB随机IO 和多会话,这种情况readahead一般不会有什么性能改善,我认为还可能会有读放大。

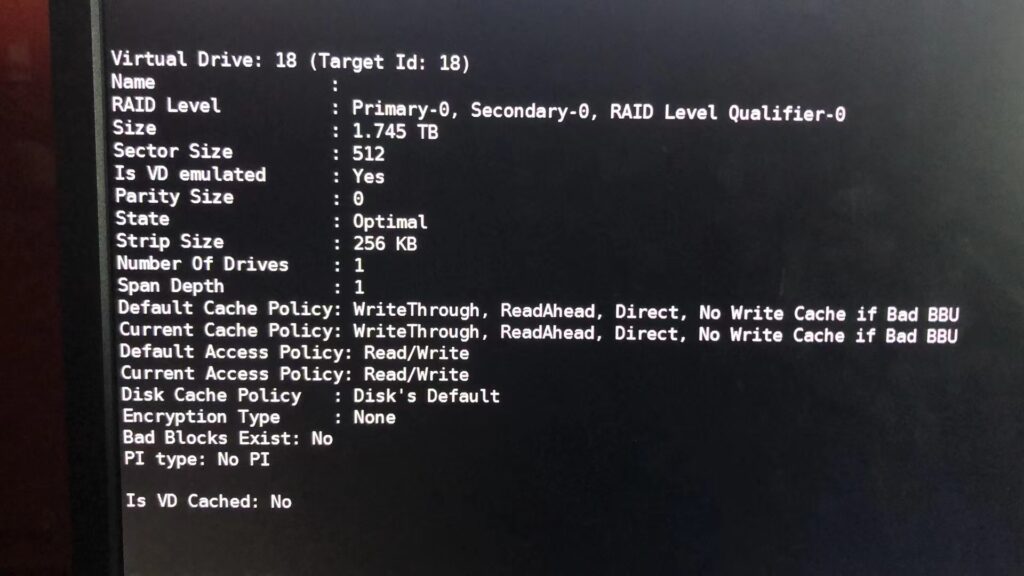

我们看一下这套数据库的RAID配置

Configure RAID set:

./MegaCli -cfgLdAdd -r0 [32:2] [WT] [NORA] [DIRECT] -a0

Where:

r0 = RAID type (r0, r1, r5, etc)

32:2 = HBD ID 32 (Default) and after colon is actual disk# as installed in the backplane.

WT = Write through (can also be WB for Write Back)

NORA = No Read Ahead (can also be Read Ahead (RA) or Adapative Read Ahead (ADRA))

DIRECT = Disk Cache Policy of Disabled (can also be Cached to enable Disk Cache Policy)

a0 = Controller ID

Configure Virtual Disk name:

./MegaCli -LDSetProp -Name SSD_Cache_Disk1 -L1 -a0

Where:

SSD_Cache_Disk1 = Name of the virtual disk, cannot have spaces or quotes

L1 = Virtual Disk volume that is going to be labeled.

a0 = Controller ID

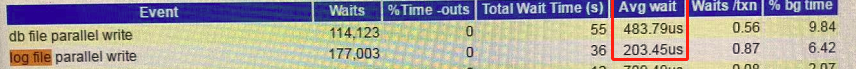

禁用了ReadAhead功能后效果

$ megacli -LDSetProp NORA -L 1,2,3 -aall

Note:

log file parallel write恢复到us级正常水平, 一切IO都有所改善,I/O性能问题消失。

References

Megacli wiki

https://raid.wiki.kernel.org/index.php/Hardware_Raid_Setup_using_MegaCli

AID Stripe Size and filesystem Readahead

https://ioflood.com/blog/2021/02/04/optimal-raid-stripe-size-and-filesystem-readahead-for-raid-10/